Gradient Descent

\(w_{t+1} = w_t - \eta \nabla f(w_t)\)
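
The update rule above can be sketched in a few lines of plain Python. The objective \(f(w) = (w - 3)^2\), the function name `gradient_descent`, and the default step size are illustrative assumptions, not part of the original notes.

```python
def gradient_descent(grad, w0, lr=0.1, steps=100):
    """Repeat w <- w - lr * grad(w) for a fixed number of steps."""
    w = w0
    for _ in range(steps):
        w = w - lr * grad(w)
    return w


# Hypothetical objective f(w) = (w - 3)^2, whose gradient is 2 * (w - 3).
w_gd = gradient_descent(lambda w: 2.0 * (w - 3.0), w0=0.0)
print(w_gd)  # approaches the minimizer w = 3
```

Here `lr` plays the role of \(\eta\). On this quadratic each step shrinks the distance to the minimizer by a factor of \(1 - 2\eta\), so a step size that is too large would make the iterates diverge.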

Stochastic Gradient Descent (SGD)
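
A minimal sketch of SGD, assuming the usual formulation in which each update uses the gradient of a single randomly chosen sample rather than the full dataset. The one-parameter model \(y = w x\), the toy data, and the name `sgd_linear_fit` are hypothetical and only serve as an illustration.

```python
import random


def sgd_linear_fit(xs, ys, lr=0.05, epochs=200, seed=0):
    """Fit y = w * x by updating w from one sample at a time."""
    rng = random.Random(seed)
    w = 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the samples in a fresh random order
        for i in indices:
            # Gradient of the per-sample loss (w * x_i - y_i)^2 w.r.t. w.
            g = 2.0 * (w * xs[i] - ys[i]) * xs[i]
            w -= lr * g
    return w


# Hypothetical noise-free data generated by y = 2 * x.
w_sgd = sgd_linear_fit([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])
print(w_sgd)  # approaches 2
```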

Momentum
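
A minimal sketch of the momentum method, assuming the classical form \(v_{t+1} = \gamma v_t + \eta \nabla f(w_t)\), \(w_{t+1} = w_t - v_{t+1}\). Libraries differ in where they apply the learning rate, so this is one common convention rather than the definitive one. The test objective and the name `momentum_descent` are assumptions.

```python
def momentum_descent(grad, w0, lr=0.1, momentum=0.9, steps=500):
    """Gradient descent with a velocity term that accumulates past gradients."""
    w, v = w0, 0.0
    for _ in range(steps):
        v = momentum * v + lr * grad(w)  # decay old velocity, add new gradient
        w = w - v
    return w


# Hypothetical objective f(w) = (w - 3)^2 with gradient 2 * (w - 3).
w_momentum = momentum_descent(lambda w: 2.0 * (w - 3.0), w0=0.0)
print(w_momentum)  # approaches 3
```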

Nesterov
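
A minimal sketch of Nesterov momentum, assuming the look-ahead formulation: the gradient is evaluated at the point the current velocity would carry the parameter to, not at the current parameter. The name `nesterov_descent` and the test objective are illustrative assumptions.

```python
def nesterov_descent(grad, w0, lr=0.1, momentum=0.9, steps=300):
    """Momentum update with the gradient taken at the look-ahead point."""
    w, v = w0, 0.0
    for _ in range(steps):
        lookahead = w - momentum * v  # where the old velocity alone would land
        v = momentum * v + lr * grad(lookahead)
        w = w - v
    return w


# Hypothetical objective f(w) = (w - 3)^2 with gradient 2 * (w - 3).
w_nesterov = nesterov_descent(lambda w: 2.0 * (w - 3.0), w0=0.0)
print(w_nesterov)  # approaches 3
```

The only difference from a plain momentum update is the argument passed to `grad`.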

AdaGrad

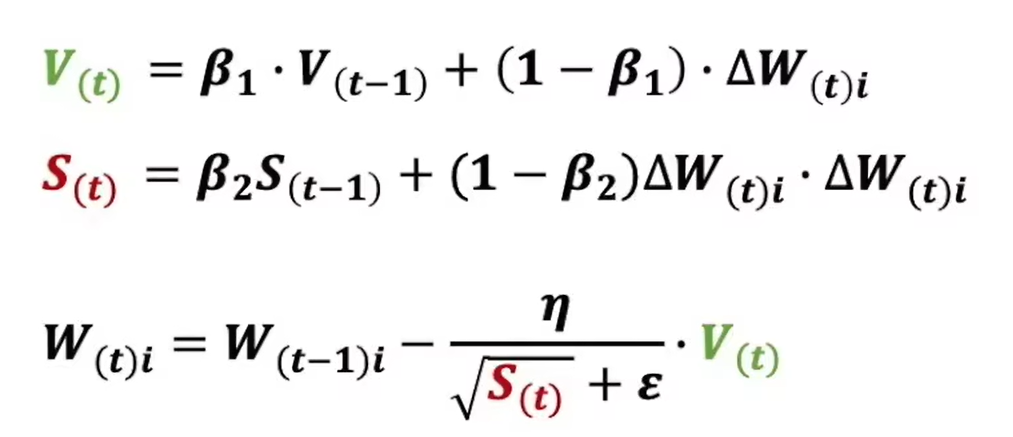

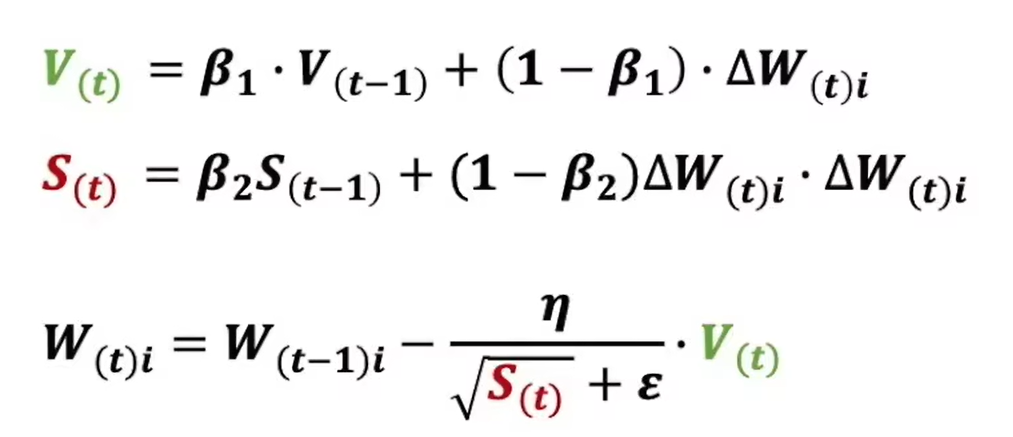
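
The figures above are not reproduced as text, so the following sketch assumes the commonly cited AdaGrad update and may differ in detail from them: accumulate the squared gradients and divide the step by the square root of that sum. The name `adagrad`, the default values, and the test objective are assumptions.

```python
import math


def adagrad(grad, w0, lr=1.0, eps=1e-8, steps=200):
    """Scale each step by the inverse root of the summed squared gradients."""
    w, g_sq_sum = w0, 0.0
    for _ in range(steps):
        g = grad(w)
        g_sq_sum += g * g  # the sum only grows, so the step size only shrinks
        w -= lr * g / (math.sqrt(g_sq_sum) + eps)
    return w


# Hypothetical objective f(w) = (w - 3)^2 with gradient 2 * (w - 3).
w_adagrad = adagrad(lambda w: 2.0 * (w - 3.0), w0=0.0)
print(w_adagrad)  # approaches 3
```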

RMSprop

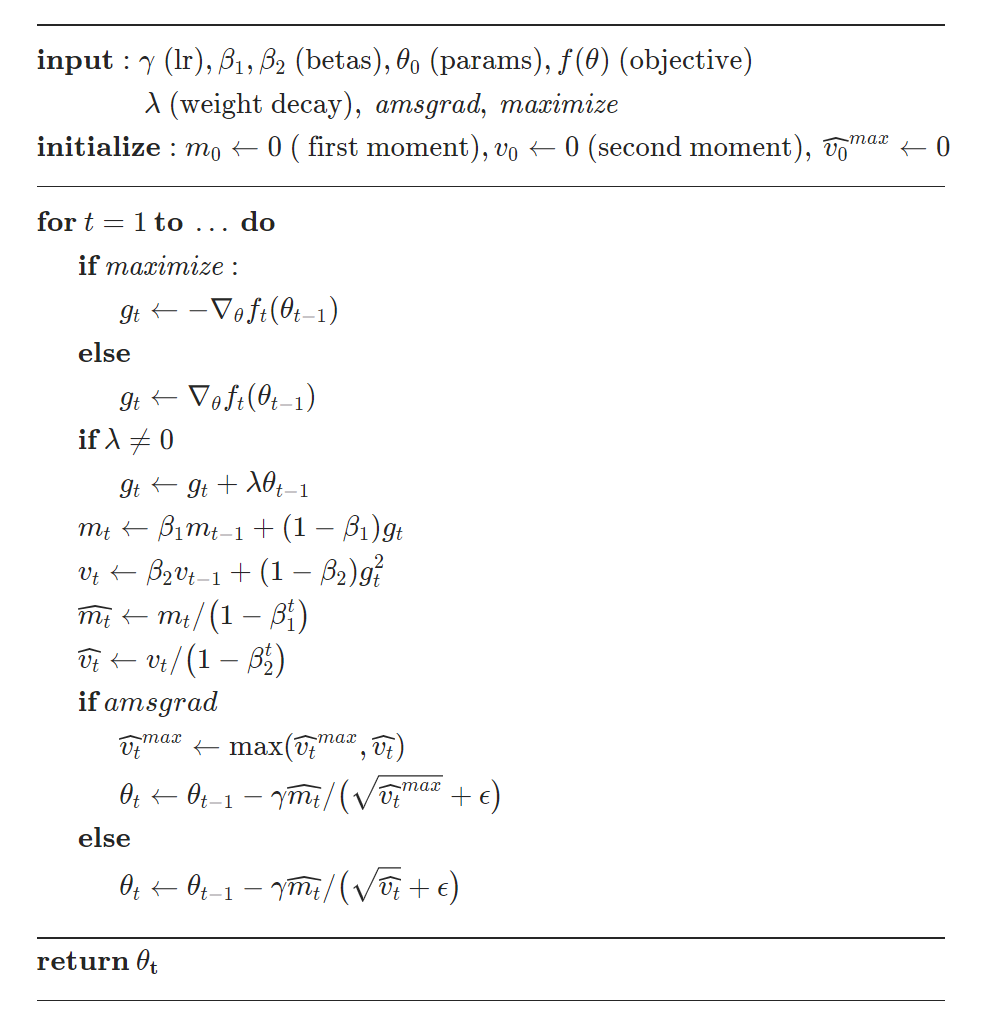

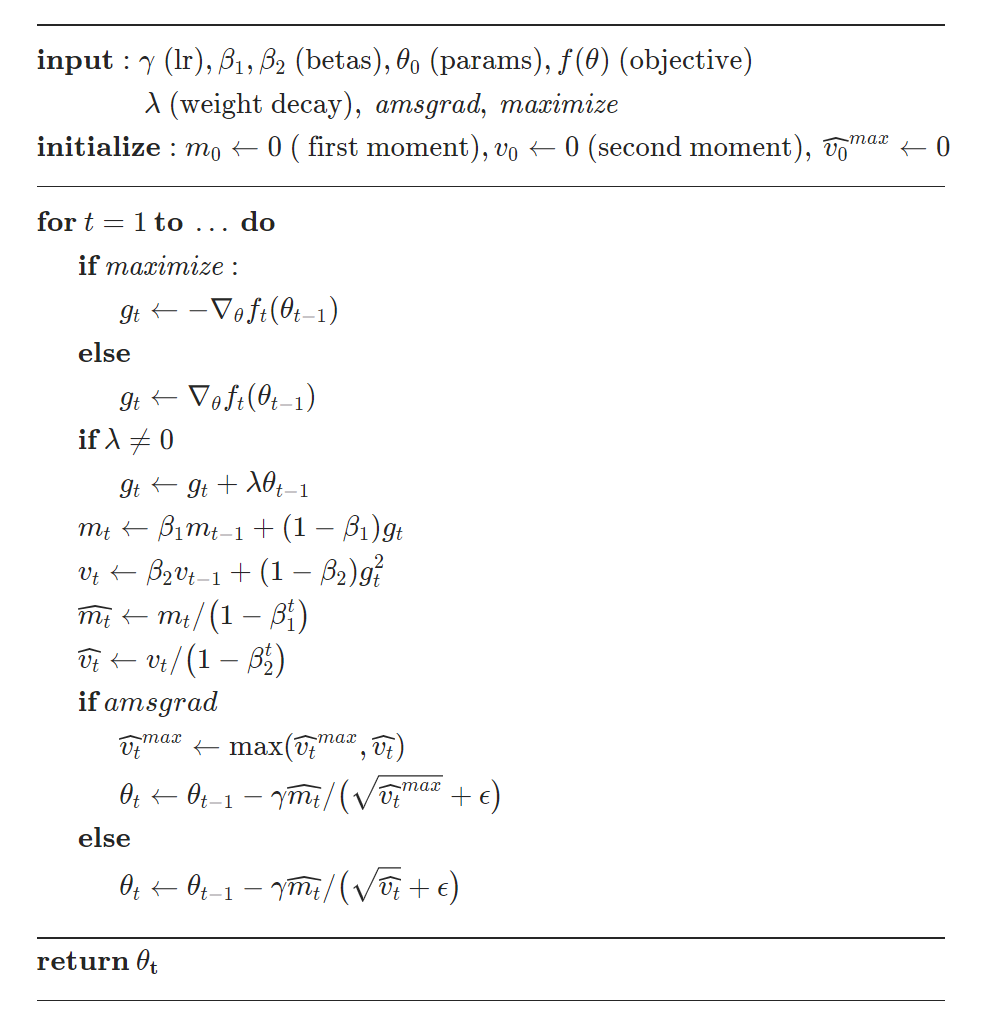
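
The figures above are not reproduced as text, so the following sketch assumes the commonly cited RMSprop update and may differ in detail from them: AdaGrad's running sum is replaced by an exponential moving average of squared gradients, so old gradients are gradually forgotten. The name `rmsprop`, the default values, and the test objective are assumptions.

```python
import math


def rmsprop(grad, w0, lr=0.01, rho=0.9, eps=1e-8, steps=1000):
    """Scale each step by the root of a moving average of squared gradients."""
    w, s = w0, 0.0
    for _ in range(steps):
        g = grad(w)
        s = rho * s + (1.0 - rho) * g * g  # exponential moving average of g^2
        w -= lr * g / (math.sqrt(s) + eps)
    return w


# Hypothetical objective f(w) = (w - 3)^2 with gradient 2 * (w - 3).
w_rmsprop = rmsprop(lambda w: 2.0 * (w - 3.0), w0=0.0)
print(w_rmsprop)  # ends close to 3
```

Because the step is normalized by the recent gradient magnitude, the iterate with a constant `lr` tends to hover in a small neighborhood of the minimizer instead of converging to it exactly.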

Adam

https://pytorch.org/docs/stable/generated/torch.optim.Adam.html
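
A minimal scalar sketch of the Adam update, assuming the basic variant without weight decay or AMSGrad: a momentum-style first moment, an RMSprop-style second moment, and bias correction for both. It is written in plain Python rather than with `torch.optim.Adam`, and the name `adam` and the test objective are illustrative assumptions.

```python
import math


def adam(grad, w0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=1000):
    """Adam: bias-corrected first and second moment estimates of the gradient."""
    w, m, v = w0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1.0 - beta1) * g        # first moment (mean of g)
        v = beta2 * v + (1.0 - beta2) * g * g    # second moment (mean of g^2)
        m_hat = m / (1.0 - beta1 ** t)           # correct the bias toward zero
        v_hat = v / (1.0 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w


# Hypothetical objective f(w) = (w - 3)^2 with gradient 2 * (w - 3).
w_adam = adam(lambda w: 2.0 * (w - 3.0), w0=0.0)
print(w_adam)  # approaches 3
```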

Author: zoyass

Permalink: https://www.zoyass.site/2024/04/13/%E4%BC%98%E5%8C%96%E7%AE%97%E6%B3%95/

License: Copyright (c) 2019 CC-BY-NC-4.0 LICENSE